Abstract

- A small, volatile type of computer memory that gives the CPU high-speed access to data. Accessing it is roughly 10-100 times faster than accessing data in main memory. It is built with SRAM.

CPU Cache size calculation

Direct Mapped Cache: Cache size = (Number of cache blocks) × (Block size)

Set Associative Cache: Cache size = (Number of sets) × (Associativity) × (Block size)

Fully Associative Cache: Cache size = (Number of cache blocks) × (Block size)
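The three formulas can be checked with a small Python sketch. Direct mapped and fully associative caches are the two extremes of a set associative cache (associativity 1, or a single set that holds every block), so all three formulas should agree for the same total number of blocks. The 64-byte block size and 512-block configuration below are hypothetical, and the formulas count data capacity only, not tag or valid bits.

```python
def direct_mapped_size(num_blocks: int, block_size: int) -> int:
    """Direct mapped: one block per set, so the number of sets equals the number of blocks."""
    return num_blocks * block_size


def set_associative_size(num_sets: int, associativity: int, block_size: int) -> int:
    """Set associative: each set holds `associativity` blocks."""
    return num_sets * associativity * block_size


def fully_associative_size(num_blocks: int, block_size: int) -> int:
    """Fully associative: a single set that can hold every block."""
    return num_blocks * block_size


# Hypothetical configuration: 64-byte blocks, 512 blocks in total.
BLOCK_SIZE = 64
print(set_associative_size(64, 8, BLOCK_SIZE))  # 32768 bytes (32 KiB), 8-way
print(direct_mapped_size(512, BLOCK_SIZE))      # 32768
print(fully_associative_size(512, BLOCK_SIZE))  # 32768

# The two extremes are special cases of the set associative formula.
assert direct_mapped_size(512, BLOCK_SIZE) == set_associative_size(512, 1, BLOCK_SIZE)
assert fully_associative_size(512, BLOCK_SIZE) == set_associative_size(1, 512, BLOCK_SIZE)
```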

Differences between L1, L2, and L3 CPU Cache?

L1 Cache is known as the primary cache and runs at the same speed as the CPU.

L2 Cache, also called the secondary cache, is accessed if the CPU cannot find the data in L1 Cache. It can be up to 10 times slower than L1 cache.

L3 Cache is known as the shared cache because it is shared among all the cores of the CPU. It is accessed if the data isn't found in the L2 cache. L3 Cache is still twice as fast as DRAM.
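The lookup order described above can be sketched as a loop over the levels. `CacheLevel`, `lookup` and all cycle counts are hypothetical: the latencies are placeholders chosen only to echo the rough ratios above, not measurements of any real CPU. The model also simplifies on purpose: levels are probed one after another (so their costs add up), and a miss does not copy the line into the upper levels.

```python
from dataclasses import dataclass, field


@dataclass
class CacheLevel:
    name: str
    latency: int  # placeholder cycle count, not a real measurement
    line_addrs: set[int] = field(default_factory=set)  # lines currently cached


def lookup(levels: list[CacheLevel], dram_latency: int, addr: int) -> tuple[str, int]:
    """Probe L1, then L2, then L3, falling back to DRAM if every level misses.

    Simplification: levels are checked one after another, so the cost is the
    sum of the latencies of every level probed. Fills on a miss are not modelled.
    """
    cost = 0
    for level in levels:
        cost += level.latency
        if addr in level.line_addrs:
            return level.name, cost
    return "DRAM", cost + dram_latency


levels = [
    CacheLevel("L1", 4, {0x100}),
    CacheLevel("L2", 12, {0x100, 0x200}),
    CacheLevel("L3", 40, {0x100, 0x200, 0x300}),
]
DRAM_LATENCY = 80  # placeholder

for addr in (0x100, 0x200, 0x300, 0x400):
    print(hex(addr), lookup(levels, DRAM_LATENCY, addr))
# 0x100 ('L1', 4)
# 0x200 ('L2', 16)
# 0x300 ('L3', 56)
# 0x400 ('DRAM', 136)
```

Each miss adds the cost of the level that was probed in vain, which is why a hit in L1 is so much cheaper than a trip all the way to DRAM.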

Cache Line

Spatial locality

A cache line is the unit of data transferred between main memory and the cache, and it typically contains several words. When the CPU fetches data from memory, it retrieves an entire cache line, not just the specific bytes needed immediately. This takes advantage of spatial locality.
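A minimal sketch of why fetching whole lines pays off, assuming 64-byte lines (common on modern x86 CPUs), 4-byte integers, and a cold cache large enough that nothing is evicted. It counts how many distinct lines a scan has to fetch; `line_of` and `lines_touched` are hypothetical helpers.

```python
LINE_SIZE = 64  # bytes; assumed line size


def line_of(addr: int, line_size: int = LINE_SIZE) -> int:
    """Index of the cache line that contains byte address `addr`."""
    return addr // line_size


def lines_touched(addresses: list[int], line_size: int = LINE_SIZE) -> int:
    """Number of distinct cache lines that must be fetched to serve these addresses."""
    return len({line_of(a, line_size) for a in addresses})


# 256 consecutive 4-byte ints starting at address 0 (1024 bytes in total).
sequential = [i * 4 for i in range(256)]
# 256 ints read with a 64-byte stride: every access lands in a new line.
strided = [i * 64 for i in range(256)]

print(lines_touched(sequential))  # 16
print(lines_touched(strided))     # 256
```

The sequential scan reads 256 integers but needs only 16 line fetches, because each 64-byte line brings 16 neighbouring integers with it. The strided scan gets no such benefit.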

How big should a cache line be?

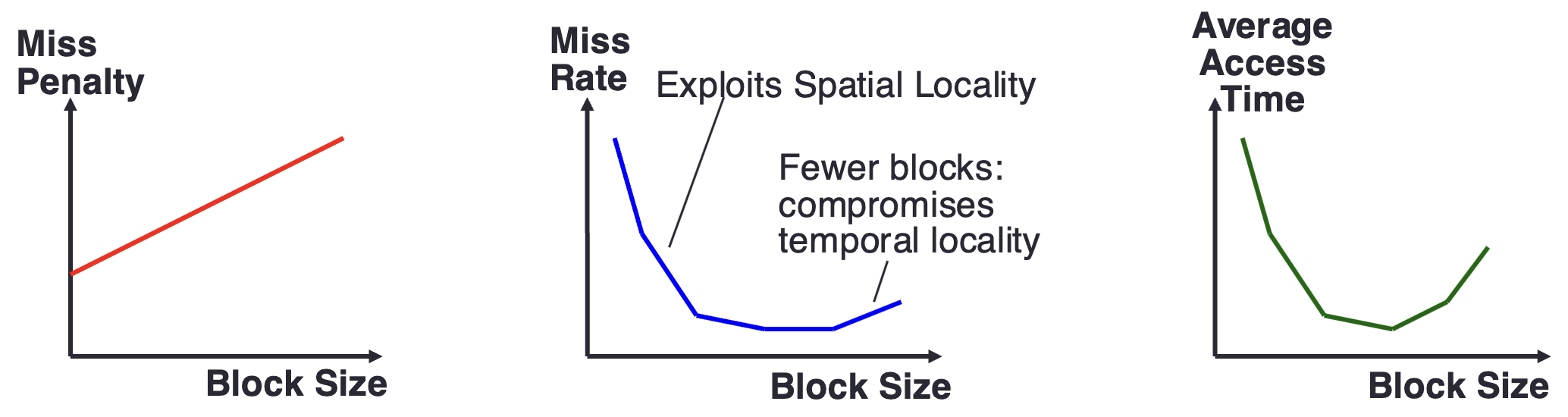

The larger the cache line, the better we can take advantage of spatial locality, since more surrounding data is brought into the CPU cache.

However, larger cache lines bring a larger miss penalty, because transferring a longer line from main memory into the CPU cache takes more time.

Furthermore, the CPU cache has a very limited size. The larger the cache line, the fewer cache lines fit in the cache. Consequently, the cached data is concentrated in fewer regions of memory, and the miss rate increases.

Therefore, we need to find a sweet spot for the cache line size: large enough to exploit spatial locality, but small enough to keep both the miss penalty and the miss rate low.
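The trade-off can be explored with a toy direct-mapped cache simulation. Every parameter here is a hypothetical choice for illustration: a 1024-byte cache, a trace that repeatedly scans four 64-byte regions spaced 1088 bytes (1024 + 64) apart, and a miss penalty of 100 cycles plus one cycle per 8 bytes transferred. The spacing is contrived so that the regions land in different cache slots when lines are small but collide once the cache holds only a few large lines.

```python
MISS_LATENCY = 100   # hypothetical fixed cost of a miss, in cycles
BYTES_PER_CYCLE = 8  # hypothetical transfer bandwidth from main memory


def miss_penalty(line_size: int) -> int:
    """Larger lines take longer to transfer, so each miss costs more."""
    return MISS_LATENCY + line_size // BYTES_PER_CYCLE


def simulate(addresses: list[int], capacity: int, line_size: int) -> int:
    """Count misses in a direct-mapped cache holding `capacity` bytes."""
    num_slots = capacity // line_size  # bigger lines -> fewer slots
    slots = [None] * num_slots         # memory line currently held by each slot
    misses = 0
    for addr in addresses:
        line = addr // line_size       # memory line containing this byte
        slot = line % num_slots        # direct mapped: exactly one candidate slot
        if slots[slot] != line:
            misses += 1
            slots[slot] = line         # fetch the whole line, evicting the old one
    return misses


def workload(regions: int = 4, region_bytes: int = 64, spacing: int = 1088,
             reps: int = 10, word: int = 4) -> list[int]:
    """Hypothetical trace: scan each region word by word, repeated `reps` times."""
    addrs = []
    for _ in range(reps):
        for r in range(regions):
            base = r * spacing
            addrs.extend(base + off for off in range(0, region_bytes, word))
    return addrs


CAPACITY = 1024
trace = workload()
for line_size in (4, 8, 16, 32, 64, 128, 256):
    misses = simulate(trace, CAPACITY, line_size)
    stall = misses * miss_penalty(line_size)
    print(f"line={line_size:4d}B  miss rate={misses / len(trace):.3f}  stall cycles={stall}")
```

With these parameters, very small lines miss often because each fetch brings in little neighbouring data, while lines of 128 bytes and above leave so few slots that the regions keep evicting each other. The stall cycles bottom out at 64-byte lines, but that sweet spot is a property of this contrived trace, not a general result.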